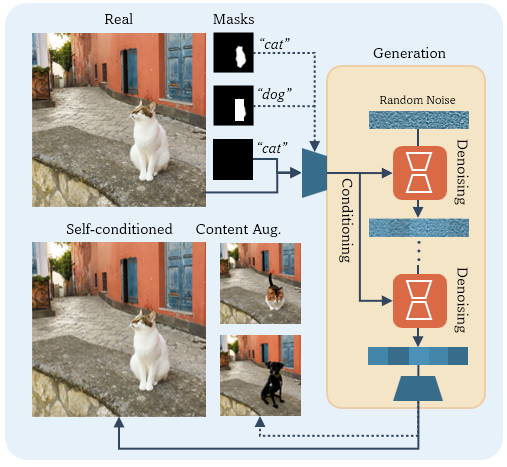

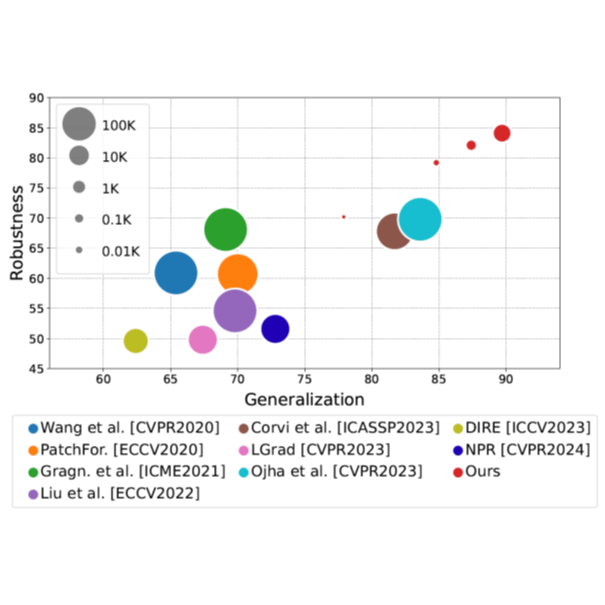

Successful forensic detectors can produce excellent results in supervised learning benchmarks but struggle to transfer to real-world applications. We believe this limitation is largely due to inadequate training data quality. While most research focuses on developing new algorithms, less attention is given to training data selection, despite evidence that performance can be strongly impacted by spurious correlations such as content, format, or resolution. A well-designed forensic detector should detect generator-specific artifacts rather than reflect data biases. To this end, we propose B-Free, a bias-free training paradigm, where fake images are generated from real ones using the conditioning procedure of Stable Diffusion models. This ensures semantic alignment between real and fake images, allowing any differences to stem solely from the subtle artifacts introduced by AI generation. Through content-based augmentation, we show significant improvements in both generalization and robustness over state-of-the-art detectors and more calibrated results across 27 different generative models, including recent releases, like FLUX and Stable Diffusion 3.5. Our findings emphasize the importance of careful dataset curation, highlighting the need for further research in dataset design.

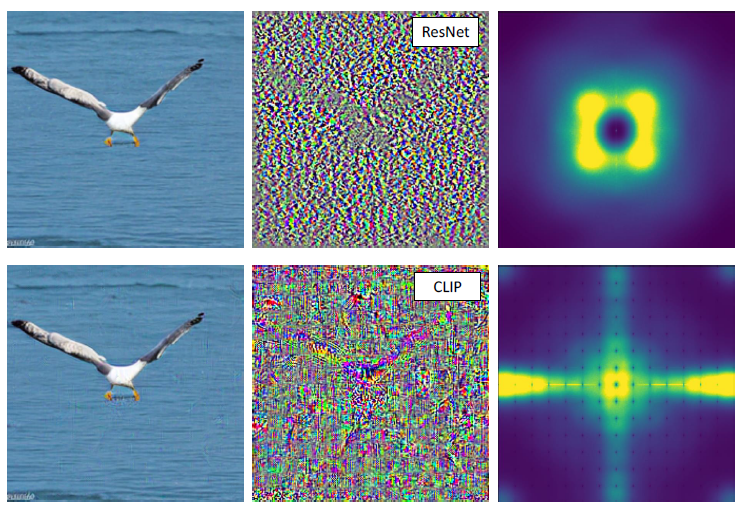

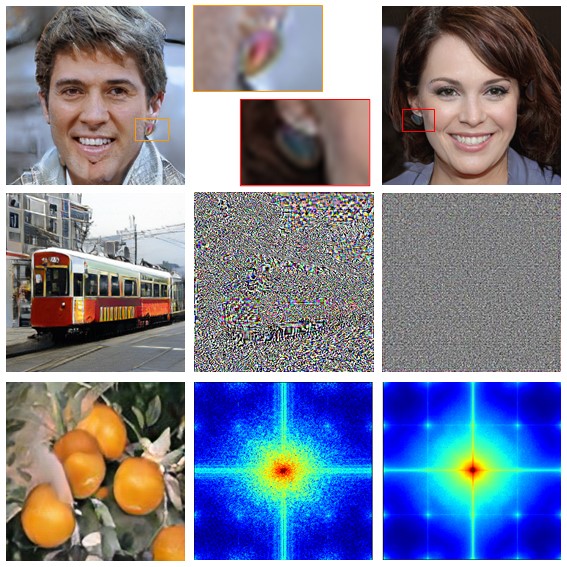

In recent years, many forensic detectors have been proposed to detect AI-generated images and prevent their use for malicious purposes. Convolutional neural networks (CNNs) have long been the dominant architecture in this field and have been the subject of intense study. However, recently proposed Transformer-based detectors have been shown to match or even outperform CNN-based detectors, especially in terms of generalization. In this paper, we study the adversarial robustness of AI-generated image detectors, focusing on Contrastive Language-Image Pretraining (CLIP)-based methods that rely on Vision Transformer (ViT) backbones and comparing their performance with CNN-based methods. We study the robustness to different adversarial attacks under a variety of conditions and analyze both numerical results and frequency-domain patterns. CLIP-based detectors are found to be vulnerable to white-box attacks just like CNN-based detectors. However, attacks do not easily transfer between CNN-based and CLIP-based methods. This is also confirmed by the different distribution of the adversarial noise patterns in the frequency domain. Overall, this analysis provides new insights into the properties of forensic detectors that can help to develop more effective strategies.
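To make the notion of a white-box attack concrete, the following minimal sketch applies one step of the fast gradient sign method (FGSM) to a toy logistic detector. FGSM is chosen here only as a standard example; the abstract does not say which attacks are studied, and the weights, features, and function names below are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def detector_score(x, w, b):
    """Probability that feature vector x is AI-generated (toy logistic detector)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(g):
    return (g > 0) - (g < 0)

def fgsm_attack(x, y, w, b, eps):
    """One FGSM step: move each component of x by eps along the sign of the loss gradient.

    For binary cross-entropy on a logistic model, d(loss)/dx = (p - y) * w,
    which the attacker can compute because the weights are known (white box).
    """
    p = detector_score(x, w, b)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

# Hypothetical detector weights and a "fake" sample (label y = 1).
w, b = [2.0, -1.0, 0.5], -0.2
x_fake = [1.0, 0.2, 0.4]
x_adv = fgsm_attack(x_fake, y=1, w=w, b=b, eps=0.3)

print("score before attack:", detector_score(x_fake, w, b))
print("score after attack: ", detector_score(x_adv, w, b))
```

The perturbation is bounded by `eps` in every component, yet it lowers the detector's "fake" score. Whether such a perturbation also fools a second detector with different weights is the transferability question raised above.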

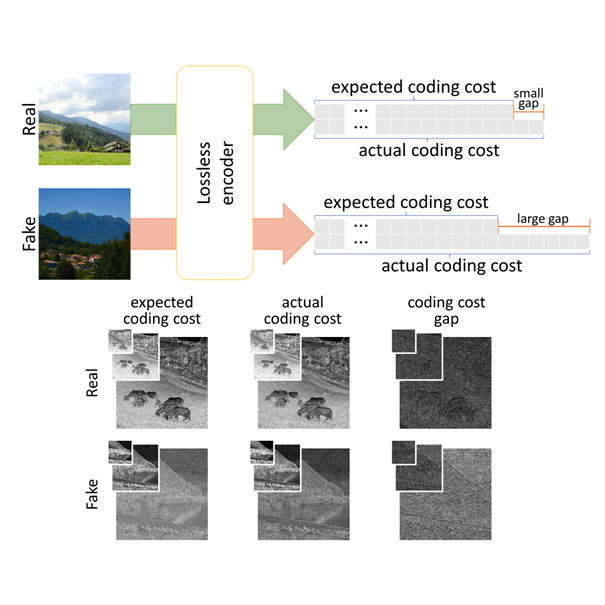

Detecting AI-generated images has become an extraordinarily difficult challenge as new generative architectures emerge on a daily basis with more and more capabilities and unprecedented realism. New versions of many commercial tools, such as DALL·E, Midjourney, and Stable Diffusion, have been released recently, and it is impractical to continually update and retrain supervised forensic detectors to handle such a large variety of models. To address this challenge, we propose a zero-shot entropy-based detection method (ZSdet) that neither needs AI-generated training data nor relies on knowledge of generative architectures to artificially synthesize their artifacts. Inspired by recent works on machine-generated text detection, our idea is to measure how surprising the image under analysis is compared to a model of real images. To this end, we rely on a lossless image encoder that is able to estimate the probability distribution of each pixel given its context. To ensure computational efficiency, the encoder has a multi-resolution architecture and contexts comprise mostly pixels of the lower-resolution version of the image. Since only real images are needed to learn the model, the detector is independent of generator architectures and synthetic training data. Using a single discriminative feature, the proposed detector achieves state-of-the-art performance. On a wide variety of generative models it achieves an average improvement of more than 3% over the SoTA in terms of accuracy.
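The idea of scoring an image by how surprising it is under a model of real images can be illustrated with a very small sketch. It replaces the multi-resolution lossless encoder with a toy context model (each quantized pixel predicted from its left neighbor only), so it is an assumption-laden illustration of the principle and not the proposed detector. All names are hypothetical.

```python
import math
from collections import defaultdict

def fit_context_model(real_images, levels=4):
    """Count how often each quantized pixel value follows each left-neighbor value.

    Only real images are used. Counts start at 1 (Laplace smoothing) so that
    unseen transitions keep a non-zero probability.
    """
    counts = defaultdict(lambda: [1] * levels)
    for img in real_images:
        for row in img:
            for left, cur in zip(row, row[1:]):
                counts[left][cur] += 1
    return dict(counts), levels

def surprisal_bits_per_pixel(img, model):
    """Average negative log2-likelihood of the image under the real-image model."""
    counts, levels = model
    total, n = 0.0, 0
    for row in img:
        for left, cur in zip(row, row[1:]):
            c = counts.get(left, [1] * levels)
            total += -math.log2(c[cur] / sum(c))
            n += 1
    return total / n

# Toy data: "real" images are smooth ramps with pixel values in {0, 1, 2, 3}.
real_images = [[[0, 0, 1, 1, 2, 2, 3, 3] for _ in range(4)]]
model = fit_context_model(real_images)

typical = [[0, 0, 1, 1, 2, 2, 3, 3] for _ in range(4)]
atypical = [[0, 3, 0, 3, 0, 3, 0, 3] for _ in range(4)]
print(surprisal_bits_per_pixel(typical, model))
print(surprisal_bits_per_pixel(atypical, model))
```

In this toy setting, the image that deviates from the learned statistics receives the higher score. A detector would then compare such a score against a decision threshold, which is not shown here.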

The aim of this work is to explore the potential of pretrained vision-language models (VLMs) for universal detection of AI-generated images. We develop a lightweight detection strategy based on CLIP features and study its performance in a wide variety of challenging scenarios. We find that, contrary to previous beliefs, it is neither necessary nor convenient to use a large domain-specific dataset for training. On the contrary, by using only a handful of example images from a single generative model, a CLIP-based detector exhibits surprising generalization ability and high robustness across different architectures, including recent commercial tools such as DALL·E 3, Midjourney v5, and Firefly. We match the state-of-the-art (SoTA) on in-distribution data and significantly improve upon it in terms of generalization to out-of-distribution data (+6% AUC) and robustness to impaired/laundered data (+13%).
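A few-shot detector on top of frozen features can be sketched as follows. The sketch assumes the CLIP features have already been extracted and uses a nearest-centroid rule with cosine similarity as a stand-in classifier. The abstract does not specify the classifier, so this choice, the tiny two-dimensional "features", and all function names are assumptions made for illustration.

```python
import math

def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]

def centroid(vectors):
    """Mean of the L2-normalized vectors, normalized again."""
    vs = [l2_normalize(v) for v in vectors]
    dim = len(vs[0])
    return l2_normalize([sum(v[i] for v in vs) / len(vs) for i in range(dim)])

def cosine(a, b):
    # Both inputs are expected to be unit-length already.
    return sum(x * y for x, y in zip(a, b))

def fit_few_shot(real_feats, fake_feats):
    """Fit one centroid per class from a handful of feature vectors."""
    return centroid(real_feats), centroid(fake_feats)

def fake_score(feat, model):
    """Positive when the feature is closer to the fake centroid than to the real one."""
    real_c, fake_c = model
    f = l2_normalize(feat)
    return cosine(f, fake_c) - cosine(f, real_c)

# Hypothetical precomputed features (real CLIP features have hundreds of dimensions).
real_feats = [[1.0, 0.1], [0.9, 0.0]]
fake_feats = [[0.1, 1.0], [0.0, 0.8]]
model = fit_few_shot(real_feats, fake_feats)
print(fake_score([0.2, 1.0], model), fake_score([1.0, 0.1], model))
```

Because the backbone stays frozen, only the two centroids are learned, which is why a handful of examples can suffice in a sketch like this one.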

In this work, we present an overview of approaches for the detection and attribution of synthetic images and highlight their strengths and weaknesses. We also point out and discuss hot topics in this field and outline promising directions for future research.

High-quality artificially generated images are widely available now and increasingly realistic, posing challenges for image forensics in distinguishing them from real ones. Unfortunately, building a single detector that generalizes well to unseen generators is very difficult, creating the need for diverse cues. In this paper, we show that natural and synthetic images differ in their color statistics, possibly due to the widely used perceptual loss, which is more sensitive to brightness than to chroma differences. Consequently, color statistics offer valuable cues for forensic analysis and the development of robust detectors. Our experiments using simple hand-crafted color functions with a random forest achieve 91% accuracy averaged over all tested Diffusion Models, even with limited training samples.
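As an illustration of what a simple hand-crafted color function might look like, the sketch below converts RGB pixels to luma and chroma (full-range BT.601 YCbCr) and summarizes their spread. The specific features are assumptions chosen for illustration and are not the color functions used in the paper.

```python
import statistics

def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 conversion from 8-bit RGB to (Y, Cb, Cr)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr

def color_features(pixels):
    """Summarize luma and chroma variability of a list of (R, G, B) pixels."""
    ys, cbs, crs = zip(*(rgb_to_ycbcr(*p) for p in pixels))
    luma_std = statistics.pstdev(ys)
    cb_std = statistics.pstdev(cbs)
    cr_std = statistics.pstdev(crs)
    return {
        "luma_std": luma_std,
        "cb_std": cb_std,
        "cr_std": cr_std,
        # Relative weight of chroma variation versus brightness variation.
        "chroma_to_luma": (cb_std + cr_std) / (luma_std + 1e-9),
    }

gray_pixels = [(10, 10, 10), (200, 200, 200)]
color_pixels = [(255, 0, 0), (0, 0, 255)]
print(color_features(gray_pixels))
print(color_features(color_pixels))
```

Feature dictionaries of this kind, computed per image, could then be fed to a classifier such as a random forest.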

The ability to detect manipulated visual content is becoming increasingly important in many application fields, given the rapid advances in image synthesis methods. Of particular concern is the possibility of modifying the content of medical images, altering the resulting diagnoses. Despite its relevance, this issue has received limited attention from the research community. One reason is the lack of large and curated datasets to use for development and benchmarking purposes. Here, we investigate this issue and propose M3Dsynth, a large dataset of manipulated Computed Tomography (CT) lung images. We create manipulated images by injecting or removing lung cancer nodules in real CT scans, using three different methods based on Generative Adversarial Networks (GAN) or Diffusion Models (DM), for a total of 8,577 manipulated samples. Experiments show that these images easily fool automated diagnostic tools. We also tested several state-of-the-art forensic detectors and demonstrated that, once trained on the proposed dataset, they are able to accurately detect and localize manipulated synthetic content, including when training and test sets are not aligned, showing good generalization ability.

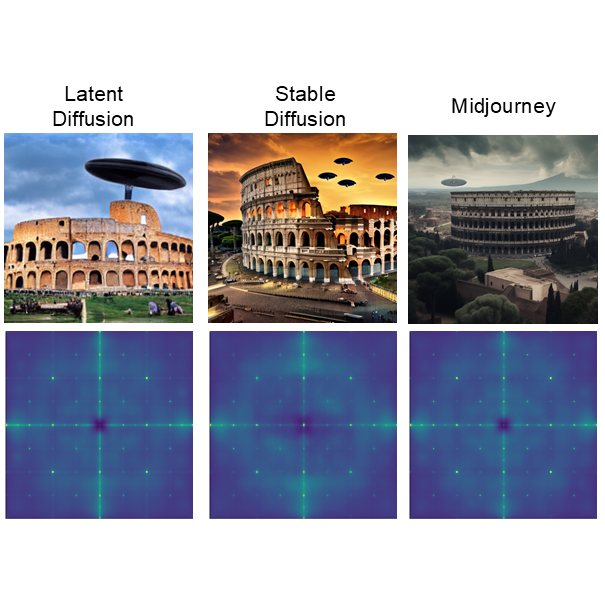

Over the past decade, there has been tremendous progress in creating synthetic media, mainly thanks to the development of powerful methods based on generative adversarial networks (GAN). Very recently, methods based on diffusion models (DM) have been gaining the spotlight. In addition to providing an impressive level of photorealism, they enable the creation of text-based visual content, opening up new and exciting opportunities in many different application fields, from arts to video games. On the other hand, this property is an additional asset in the hands of malicious users, who can generate and distribute fake media perfectly adapted to their attacks, posing new challenges to the media forensic community. With this work, we seek to understand how difficult it is to distinguish synthetic images generated by diffusion models from pristine ones and whether current state-of-the-art detectors are suitable for the task. To this end, we first expose the forensic traces left by diffusion models, then study how current detectors, developed for GAN-generated images, perform on these new synthetic images, especially in challenging social-network scenarios involving image compression and resizing.

Detecting fake images is becoming a major goal of computer vision. This need is becoming more and more pressing with the continuous improvement of synthesis methods based on Generative Adversarial Networks (GAN), and even more with the appearance of powerful methods based on Diffusion Models (DM). Towards this end, it is important to gain insight into which image features better discriminate fake images from real ones. In this paper we report on our systematic study of a large number of image generators of different families, aimed at discovering the most forensically relevant characteristics of real and generated images. Our experiments provide a number of interesting observations and shed light on some intriguing properties of synthetic images: (1) not only the GAN models but also the DM and VQ-GAN (Vector Quantized Generative Adversarial Networks) models give rise to visible artifacts in the Fourier domain and exhibit anomalous regular patterns in the autocorrelation; (2) when the dataset used to train the model lacks sufficient variety, its biases can be transferred to the generated images; (3) synthetic and real images exhibit significant differences in the mid-high frequency signal content, observable in their radial and angular spectral power distributions.
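The radial spectral power distribution mentioned in point (3) can be sketched as the power spectrum averaged over rings of equal spatial frequency. The code below uses a naive 2D DFT so that it needs only the standard library; in practice a fast transform such as `numpy.fft.fft2` would be used instead. The function names and the integer ring binning are assumptions of this sketch.

```python
import cmath
import math

def dft2_power(img):
    """Power spectrum |F(u, v)|^2 of a small 2D image via a naive DFT."""
    h, w = len(img), len(img[0])
    power = [[0.0] * w for _ in range(h)]
    for u in range(h):
        for v in range(w):
            s = 0j
            for y in range(h):
                for x in range(w):
                    s += img[y][x] * cmath.exp(-2j * cmath.pi * (u * y / h + v * x / w))
            power[u][v] = abs(s) ** 2
    return power

def radial_power_profile(img):
    """Map each integer frequency radius to the mean power on that ring."""
    h, w = len(img), len(img[0])
    power = dft2_power(img)
    sums, counts = {}, {}
    for u in range(h):
        for v in range(w):
            # Indices above N/2 are negative frequencies; fold them back.
            fu, fv = min(u, h - u), min(v, w - v)
            r = int(round(math.hypot(fu, fv)))
            sums[r] = sums.get(r, 0.0) + power[u][v]
            counts[r] = counts.get(r, 0) + 1
    return {r: sums[r] / counts[r] for r in sorted(sums)}

# A checkerboard concentrates its non-DC energy at the highest frequency.
checker = [[(x + y) % 2 for x in range(8)] for y in range(8)]
for radius, p in radial_power_profile(checker).items():
    print(radius, round(p, 3))
```

Comparing such profiles, averaged over many real and many synthetic images, is one way to visualize differences in mid-high frequency content.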

The widespread diffusion of synthetically generated content is a serious threat that needs urgent countermeasures. As a matter of fact, the generation of synthetic content is not restricted to multimedia data like videos, photographs or audio sequences, but covers a much wider area that can include biological images as well, such as western blot and microscopic images. In this paper, we focus on the detection of synthetically generated western blot images. These images are widely explored in the biomedical literature, and it has already been shown that they can be easily counterfeited, with little hope of spotting manipulations by visual inspection or by using standard forensic detectors. To overcome the absence of publicly available data for this task, we create a new dataset comprising more than 14K original western blot images and 24K synthetic western blot images, generated using four different state-of-the-art generation methods. We investigate different strategies to detect synthetic western blots, exploring binary classification methods as well as one-class detectors. In both scenarios, we never exploit synthetic western blot images at the training stage. The achieved results show that synthetically generated western blot images can be spotted with good accuracy, even though the exploited detectors are not optimized over synthetic versions of these scientific images. We also test the robustness of the developed detectors against post-processing operations commonly performed on scientific images, showing that we can be robust to JPEG compression and that some generative models are easily recognizable, even though editing might alter the artifacts they leave.

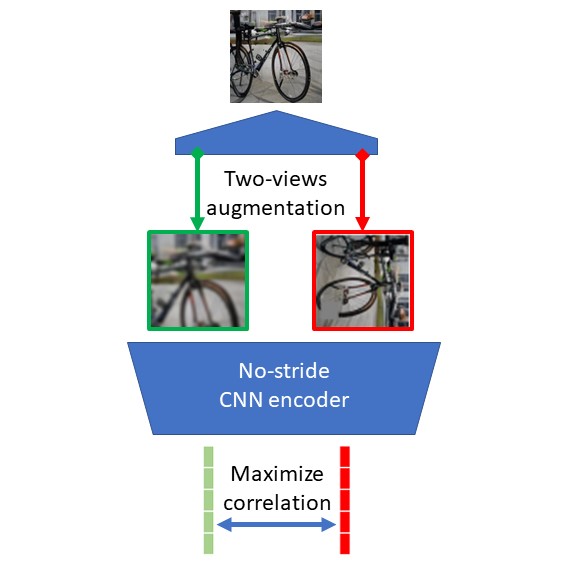

The ever higher quality and wide diffusion of fake images have spawned a quest for reliable forensic tools. Many GAN image detectors have been proposed recently. In real-world scenarios, however, most of them show limited robustness and generalization ability. Moreover, they often rely on side information not available at test time, that is, they are not universal. We investigate these problems and propose a new GAN image detector based on a limited sub-sampling architecture and a suitable contrastive learning paradigm. Experiments carried out in challenging conditions prove the proposed method to be a first step towards universal GAN image detection, ensuring also good robustness to common image impairments, and good generalization to unseen architectures.

The advent of deep learning has brought a significant improvement in the quality of generated media. However, with the increased level of photorealism, synthetic media are becoming hardly distinguishable from real ones, raising serious concerns about the spread of fake or manipulated information over the Internet. In this context, it is important to develop automated tools to reliably and timely detect synthetic media. In this work, we analyze the state-of-the-art methods for the detection of synthetic images, highlighting the key ingredients of the most successful approaches, and comparing their performance over existing generative architectures. We will devote special attention to realistic and challenging scenarios, like media uploaded on social networks or generated by new and unseen architectures, analyzing the impact of suitable augmentation and training strategies on the detectors’ generalization ability.

Thanks to the fast progress in synthetic media generation, creating realistic false images has become very easy. Such images can be used to wrap rich fake news with enhanced credibility, spawning a new wave of high-impact, high-risk misinformation campaigns. Therefore, there is a fast-growing interest in reliable detectors of manipulated media. The most powerful detectors, to date, rely on the subtle traces left by any device on all images acquired by it. In particular, due to proprietary in-camera processes, like demosaicing or compression, each camera model leaves trademark traces that can be exploited for forensic analyses. The absence or distortion of such traces in the target image is a strong hint of manipulation. In this paper, we challenge such detectors to gain better insight into their vulnerabilities. This is an important study in order to build better forgery detectors able to face malicious attacks. Our proposal consists of a GAN-based approach that injects camera traces into synthetic images. Given a GAN-generated image, we insert the traces of a specific camera model into it and deceive state-of-the-art detectors into believing the image was acquired by that model. Likewise, we deceive independent detectors of synthetic GAN images into believing the image is real. Experiments prove the effectiveness of the proposed method in a wide array of conditions. Moreover, no prior information on the attacked detectors is needed, but only sample images from the target camera.

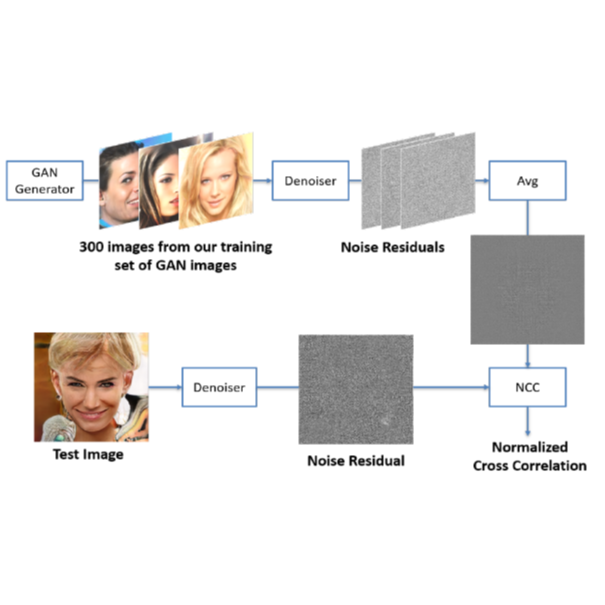

In the last few years, generative adversarial networks (GAN) have shown tremendous potential for a number of applications in computer vision and related fields. With the current pace of progress, it is a sure bet they will soon be able to generate high-quality images and videos, virtually indistinguishable from real ones. Unfortunately, realistic GAN-generated images pose serious threats to security, starting with a possible flood of fake multimedia, and multimedia forensic countermeasures are urgently needed. In this work, we show that each GAN leaves its specific fingerprint in the images it generates, just like real-world cameras mark acquired images with traces of their photo-response non-uniformity pattern. Source identification experiments with several popular GANs show such fingerprints to represent a precious asset for forensic analyses.
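A fingerprint of this kind can be sketched, by analogy with PRNU estimation, as the average of the noise residuals of many images from the same source, with attribution done by correlating a test residual against each candidate fingerprint. The sketch below makes strong simplifying assumptions: a 3x3 mean filter stands in for a proper denoiser, and the two "generators" are toy functions that add a fixed periodic pattern to random content. All names are hypothetical.

```python
import math
import random

def residual(img):
    """High-pass residual: image minus its 3x3 local mean (edges clamped)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            nb = [img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                  for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            out[y][x] = img[y][x] - sum(nb) / 9.0
    return out

def fingerprint(images):
    """Average the residuals so that content cancels and the shared pattern remains."""
    res = [residual(im) for im in images]
    h, w = len(res[0]), len(res[0][0])
    return [[sum(r[y][x] for r in res) / len(res) for x in range(w)] for y in range(h)]

def correlation(a, b):
    """Normalized cross-correlation between two equally sized 2D arrays."""
    fa = [v for row in a for v in row]
    fb = [v for row in b for v in row]
    ma, mb = sum(fa) / len(fa), sum(fb) / len(fb)
    num = sum((x - ma) * (y - mb) for x, y in zip(fa, fb))
    den = math.sqrt(sum((x - ma) ** 2 for x in fa) * sum((y - mb) ** 2 for y in fb))
    return num / den if den else 0.0

def pattern_a(x, y):  # toy "generator A" artifact: checkerboard
    return 2.0 * (-1) ** (x + y)

def pattern_b(x, y):  # toy "generator B" artifact: vertical stripes
    return 2.0 * (-1) ** x

def toy_image(pattern, rng, size=8):
    """Random content plus the generator's fixed artifact pattern."""
    return [[rng.uniform(-1, 1) + pattern(x, y) for x in range(size)] for y in range(size)]

rng = random.Random(0)
fp_a = fingerprint([toy_image(pattern_a, rng) for _ in range(10)])
fp_b = fingerprint([toy_image(pattern_b, rng) for _ in range(10)])

probe = residual(toy_image(pattern_a, rng))  # unseen image from generator A
print("corr with A:", correlation(probe, fp_a))
print("corr with B:", correlation(probe, fp_b))
```

The probe is attributed to the source whose fingerprint correlates best with its residual.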

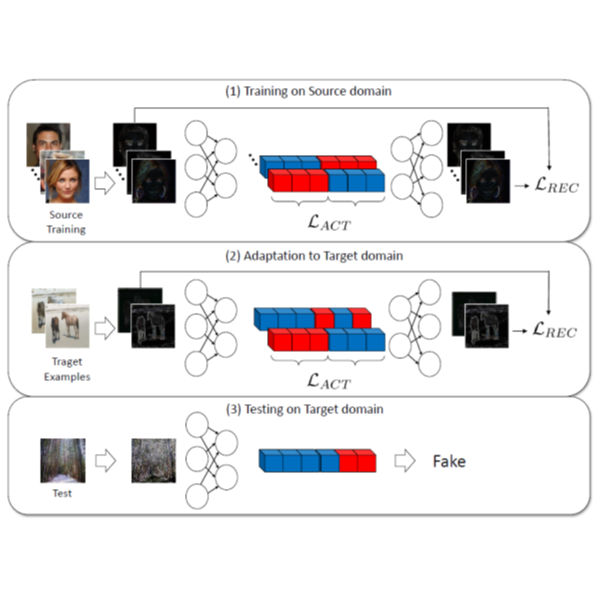

Distinguishing manipulated from real images is becoming increasingly difficult as new sophisticated image forgery approaches come out by the day. Naive classification approaches based on Convolutional Neural Networks (CNNs) show excellent performance in detecting image manipulations when they are trained on a specific forgery method. However, on examples from unseen manipulation approaches, their performance drops significantly. To address this limitation in transferability, we introduce Forensic-Transfer (FT). We devise a learning-based forensic detector which adapts well to new domains, i.e., novel manipulation methods, and can handle scenarios where only a handful of fake examples are available during training. To this end, we learn a forensic embedding based on a novel autoencoder-based architecture that can be used to distinguish between real and fake imagery. The learned embedding acts as a form of anomaly detector; namely, an image manipulated with an unseen method will be detected as fake provided it maps sufficiently far away from the cluster of real images. Compared to prior works, FT shows significant improvements in transferability, which we demonstrate in a series of experiments on cutting-edge benchmarks. For instance, on unseen examples, we achieve up to 85% in terms of accuracy, and with only a handful of seen examples, our performance already reaches around 95%.
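The anomaly-detection reading of the embedding can be sketched as a one-class rule: an image is flagged as fake when its embedding lies farther from the centroid of real embeddings than a radius estimated from the real data. This is a simplified stand-in that assumes the embeddings are already computed; it does not reproduce the autoencoder-based architecture, and the margin factor and function names are assumptions.

```python
import math

def mean_vector(vectors):
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]

def fit_real_cluster(real_embeddings, margin=1.5):
    """Centroid of real embeddings plus a radius with a safety margin.

    The radius is the largest distance observed among real samples,
    multiplied by a hypothetical margin factor.
    """
    center = mean_vector(real_embeddings)
    radius = margin * max(math.dist(e, center) for e in real_embeddings)
    return center, radius

def is_fake(embedding, cluster):
    """Flag an embedding that falls outside the real-image cluster."""
    center, radius = cluster
    return math.dist(embedding, center) > radius

# Hypothetical 2D embeddings of real images.
real_embeddings = [[0.0, 0.0], [0.2, 0.1], [-0.1, 0.2]]
cluster = fit_real_cluster(real_embeddings)
print(is_fake([3.0, 3.0], cluster), is_fake([0.05, 0.1], cluster))
```

Since the rule is fitted on real images only, it does not depend on which manipulation method produced the fake, which is the property that makes this kind of detector transferable in principle.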